Migrating WebPlatform.org MediaWiki into Git as Markdown files


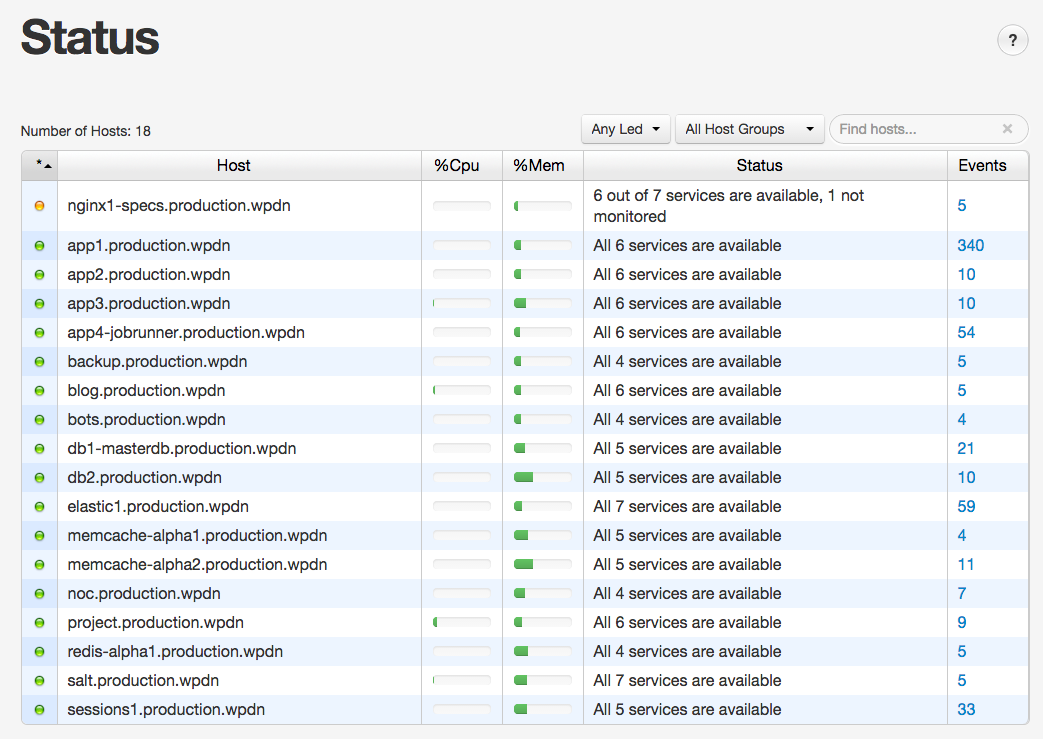

The WebPlatform project was running on about 20 VMs, with availability managed in part by Monit. At the end of the project, the infrastructure "had to go away".

On July 1st, 2015, the WebPlatform project was discontinued after its sponsors withdrew from the project.

I was hired by the W3C solely to maintain the WebPlatform project. Without funding, and no budget at W3C/MIT to transfer me, my contract was going to end.

With me as the only full-time person on the project with knowledge of the infrastructure, and also as a believer in “don’t break the web”, I wanted to keep everything online.

After I inherited the project, I migrated the full infrastructure, about 20 Linux VMs, from one cloud provider to another. I also worked on a few projects, such as an attempt at Single Sign-On and better compatibility tables.

Since the project was being closed, most of what I had worked on lost its use. The infrastructure was also going to become a burden.

While planning my last weeks, I agreed with the W3C systems team ("w3t-systeam") to convert as much as possible into a static site.

The following are notes about the software I wrote to migrate a WordPress blog and multiple MediaWiki namespaces into a Git repository.

Priorities

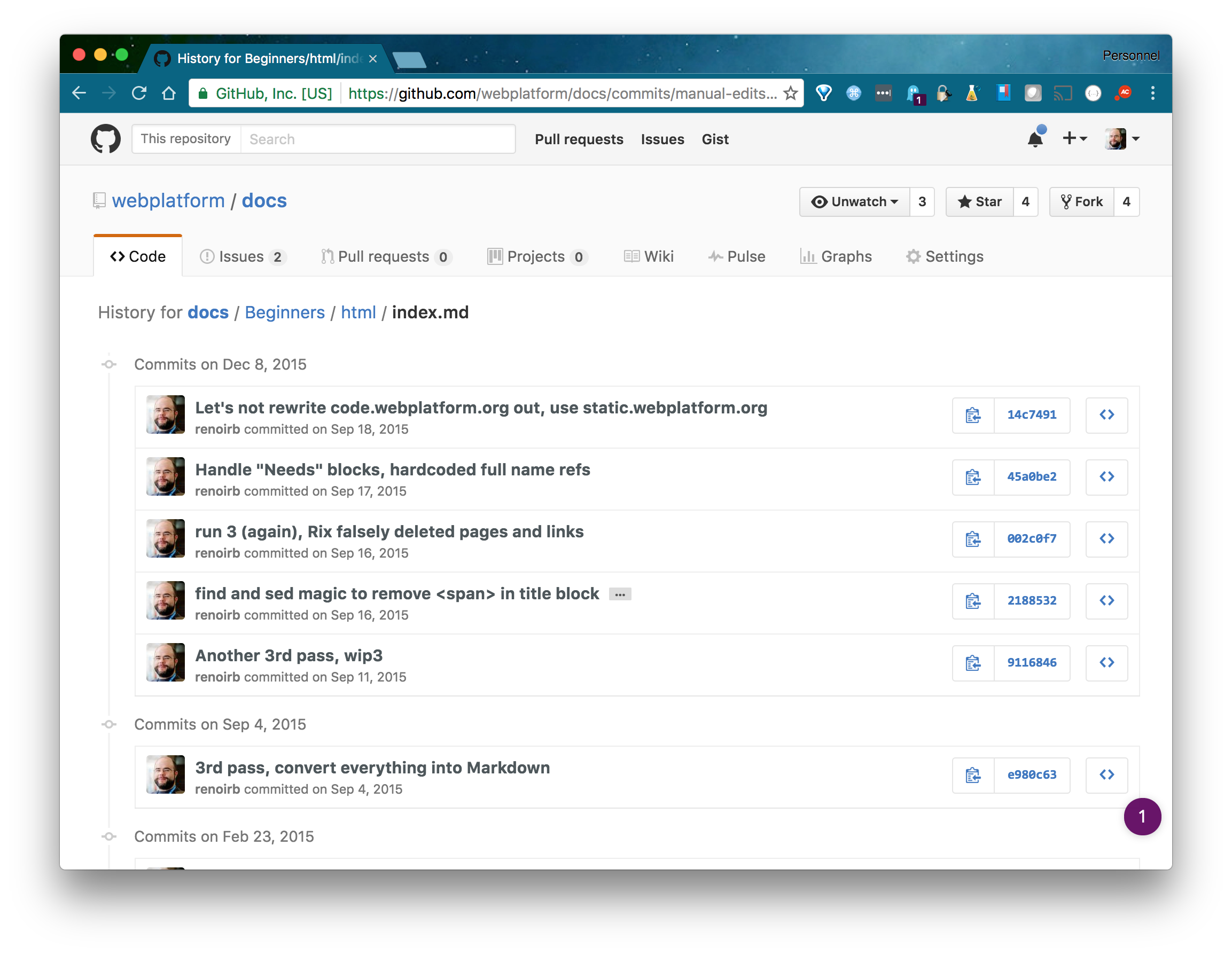

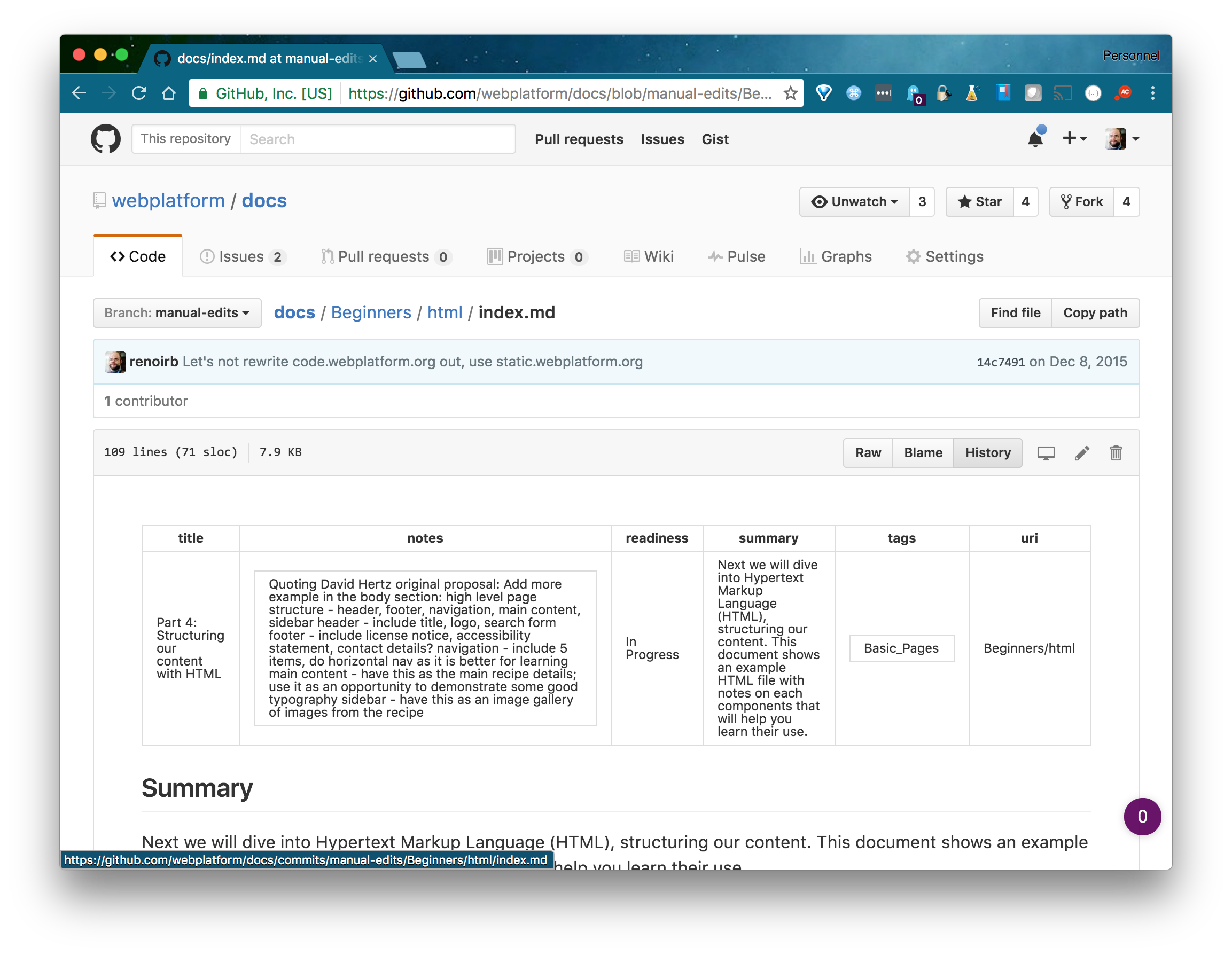

The priorities were to keep the documentation pages up, preserve the contribution history and attributions, keep the blog contents, and have everything served as static HTML hosted on GitHub Pages.

I wanted to have this done before I left the W3C.

Since the SysTeam would keep control of the webplatform.org domain, they decided to support some redirects from the original domain to webplatform.github.io.

Once everything was migrated, we added a note:

The WebPlatform project, supported by various stewards between 2012 and 2015, has been discontinued

Source: webplatform.github.io

Outcome

The conversion work took the last two months.

Every link in this article to a page showing the WebPlatform logo points to the result of what I’m describing here, since the migration is done and all servers were shut down and decommissioned in 2016.

The source of the site was generated from webplatform/generator-docs, and we created webplatform/webplatform.github.io to host the site on GitHub Pages. Eventually, the W3C SysTeam set the repository as read-only.

There were other things that we had initially planned but couldn’t do (see Requirements we couldn’t meet).

Here is what I could migrate:

| From | To | Repository | Comment | Commits | Documents | Deleted |
|---|---|---|---|---|---|---|
| docs.webplatform.org/wiki/* | webplatform.github.io/docs/* | webplatform/docs | The main docs pages | 37,000 | 4,675 | 418 |
| docs.webplatform.org/wiki/Meta:* | webplatform.github.io/docs/Meta/* | webplatform/docs-meta | Archived content that needed to be moved during initial mass imports. | 300 | 58 | 170 |
| docs.webplatform.org/wiki/WPD:* | webplatform.github.io/docs/WPD/* | webplatform/docs-wpd | Community and notes section. Example /wiki/WPD:Infrastructure into /docs/WPD/Infrastructure (source) | 5,700 | 358 | 323 |
| blog.webplatform.org | webplatform.github.io/blog/* | webplatform/blog | The blog content | 2 | 53 | N/A |

Migration

👀 During this migration project, I was hoping to find a way to take any web page, from any CMS, and get a full copy of it (including images) in Markdown. There was none. In 2017, I created Archivator (npm) to help with such work. Instead of using the last step described here, anyone could use Archivator to retrieve a copy of their web pages.

While building the solution, a few requirements emerged; they’re listed in Requirements.

Blog

The blog was migrated manually: I just converted the HTML into Markdown using Pandoc.

MediaWiki

For migrating MediaWiki, the process was a bit more complex.

🤲🏼 If you’re looking for a HowTo to migrate your own MediaWiki installation, the procedure is written in ➡️ webplatform/mediawiki-conversion’s README

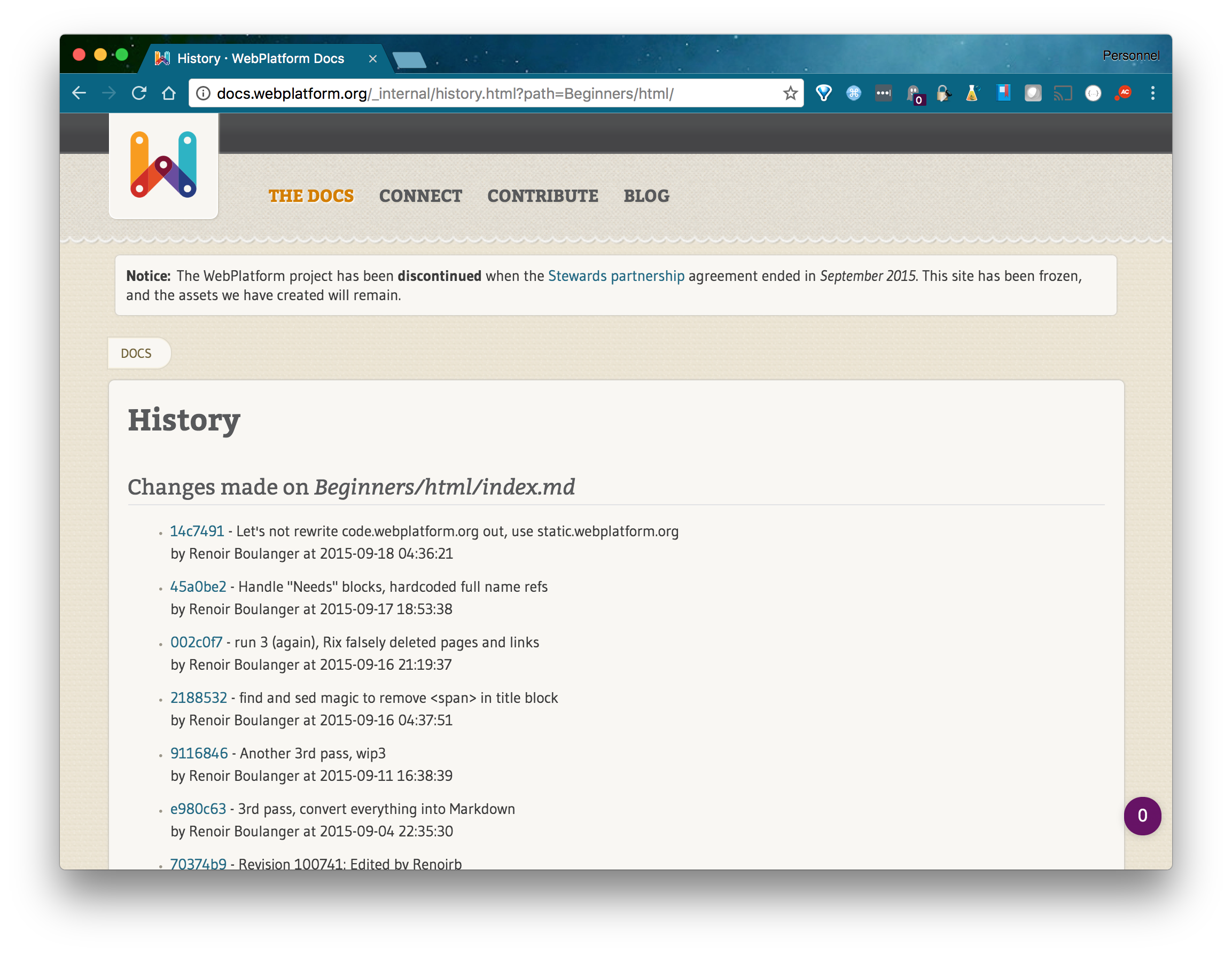

To migrate, we needed the history of each page, from creation to the last edit.

With MediaWiki, we have Manual:DumpBackup.php, which creates an XML file ("MediaWiki XML dump") of all history of all pages, one per namespace. For WebPlatform Docs’ wikis, we had: /wiki/Meta:*, /wiki/WPD:*, and /wiki/*.
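To give an idea of what reading such a dump involves, here is a minimal Python sketch. It is not taken from webplatform/content-converter; the `Revision` class and the `read_revisions` helper are hypothetical names, and the element names (`page`, `revision`, `timestamp`, `contributor`, `text`) are the ones found in a MediaWiki XML export.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import Iterator


@dataclass
class Revision:
    """One edit of one page (hypothetical model, for illustration only)."""
    title: str
    timestamp: str
    author: str
    comment: str
    text: str


def local(tag: str) -> str:
    """Drop the XML namespace so the sketch is not tied to one export schema version."""
    return tag.rsplit("}", 1)[-1]


def child_text(element, name: str) -> str:
    """Return the text of the first direct child called `name`, or an empty string."""
    for child in element:
        if local(child.tag) == name:
            return child.text or ""
    return ""


def read_revisions(xml_source) -> Iterator[Revision]:
    """Yield every revision of every page, in the order the dump lists them."""
    for _, page in ET.iterparse(xml_source, events=("end",)):
        if local(page.tag) != "page":
            continue
        title = child_text(page, "title")
        for rev in page:
            if local(rev.tag) != "revision":
                continue
            contributor = next((c for c in rev if local(c.tag) == "contributor"), None)
            author = ""
            if contributor is not None:
                # Anonymous edits carry an <ip> instead of a <username>.
                author = child_text(contributor, "username") or child_text(contributor, "ip")
            yield Revision(
                title,
                child_text(rev, "timestamp"),
                author,
                child_text(rev, "comment"),
                child_text(rev, "text"),
            )
        page.clear()  # free memory once a page has been consumed
```

`read_revisions` accepts a file name or a binary file object, and streams the dump page by page, which matters when a dump holds tens of thousands of edits.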

A big part of processing and converting history is the data model: we read from one format into the data model, then use it as the data source for the conversion.

For this, I’ve created webplatform/content-converter, an abstract library specialized for data manipulation to help "Transform CMS content from a format into another [format]". It’s written in PHP, and might still work even though it hasn’t been touched since 2015.

webplatform/content-converter takes care of manipulating data for:

- Date of contribution and author information

  Content management systems like MediaWiki have a way to tell the user and date of an edit.

- Take each edit’s contents as they were

  Do not change any of the contents of each edit, even though they are in a format understood by the original engine, here MediaWiki.

To convert each page history edit into Git commits, I’ve created webplatform/mediawiki-conversion.

This is the utility that takes care of the conversion (see comments made here).

-

Handle deleted pages

For each edit create a git revisions commit until the final file delete. At the end of that step, the Git repository should have an history, but no aparent files. At this step, we can also use the entries and make a redirect map.

-

Handle pages that weren’t deleted in history

Do the same as above. At this step, we should have a repository with text files where each file has exactly the same content as the source history. So we can add them on top of the deleted pages following the same process as above. We can also use those as a list of current pages we’ll want to be converted into Markdown.

-

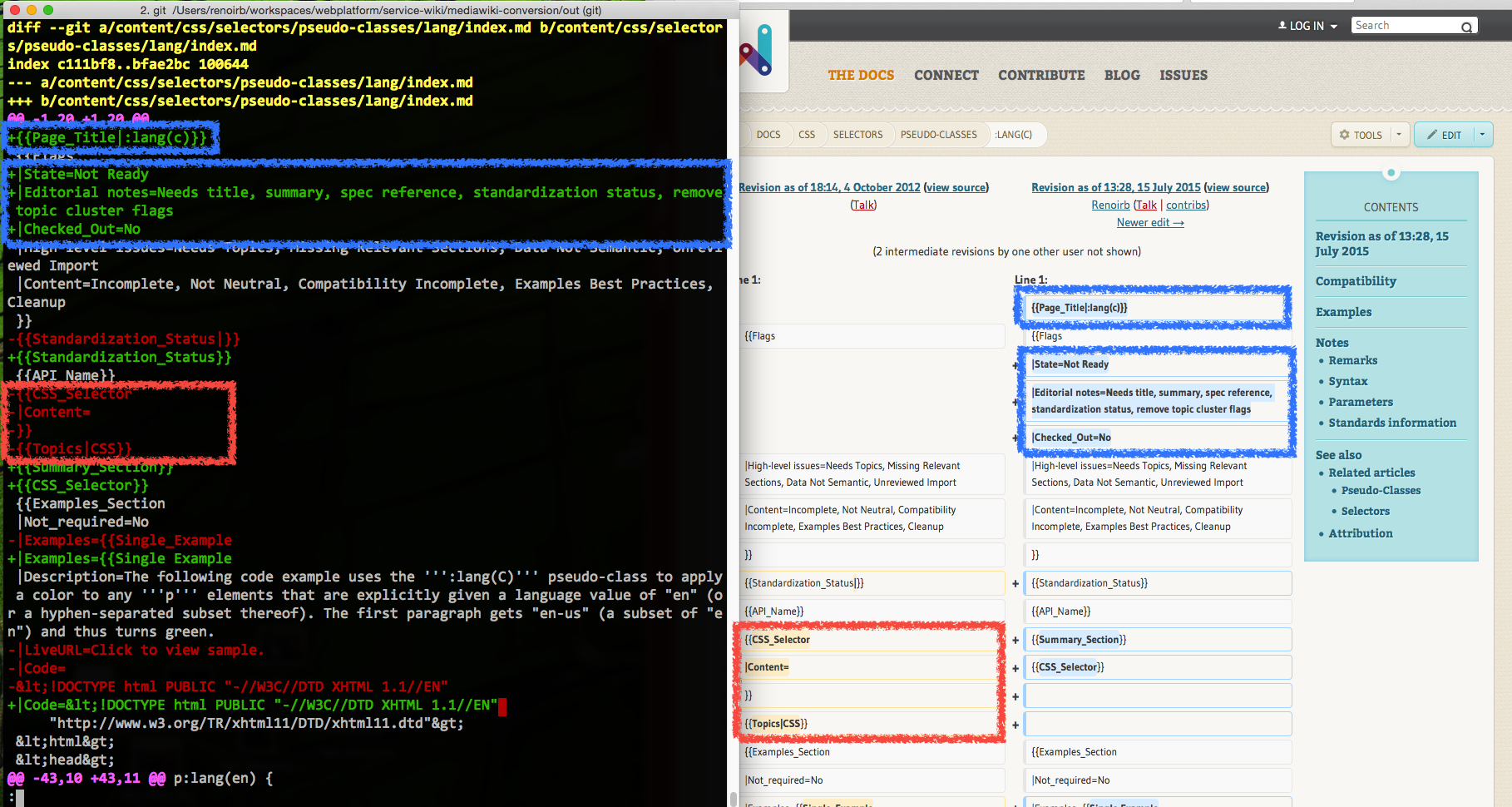

Convert content

For all pages that weren’t deleted in history. Query MediaWiki API to retrieve the full HTML, including Transclusions pass it to Pandoc, so we get it converted into Markdown. Commit the rewritten file contents with Markdown.
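The first two steps, replaying each edit as a commit with its original author and date, can be sketched as follows. This is not taken from webplatform/mediawiki-conversion: it is a minimal Python illustration that assumes `git fast-import` as the way to write history, and the helper names (`to_epoch`, `data_block`, `commit_record`) and the e-mail placeholder are hypothetical.

```python
from calendar import timegm
from time import strptime


def to_epoch(timestamp: str) -> int:
    """Convert a MediaWiki UTC timestamp such as 2012-10-08T10:00:00Z to epoch seconds."""
    return timegm(strptime(timestamp, "%Y-%m-%dT%H:%M:%SZ"))


def data_block(payload: str) -> bytes:
    """fast-import 'data' command: exact byte count, the raw bytes, then a newline."""
    raw = payload.encode("utf-8")
    return b"data %d\n" % len(raw) + raw + b"\n"


def commit_record(path, text, author, email, timestamp, message,
                  branch="refs/heads/master") -> bytes:
    """Build one fast-import commit for one wiki edit.

    `text=None` stands for the final deletion of a page. `path` is assumed
    to be already normalized to plain ASCII without leading quotes.
    """
    ident = f"{author} <{email}> {to_epoch(timestamp)} +0000\n".encode("utf-8")
    out = f"commit {branch}\n".encode("utf-8")
    out += b"author " + ident + b"committer " + ident
    out += data_block(message or f"Edit {path}")
    if text is None:
        out += f"D {path}\n".encode("utf-8")
    else:
        out += f"M 100644 inline {path}\n".encode("utf-8") + data_block(text)
    return out + b"\n"
```

Concatenating the records in chronological order and piping the result into `git fast-import` inside an empty repository would produce one commit per edit. Pages whose last record is a deletion then leave history behind but no file, which matches the "history, but no apparent files" state described above.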

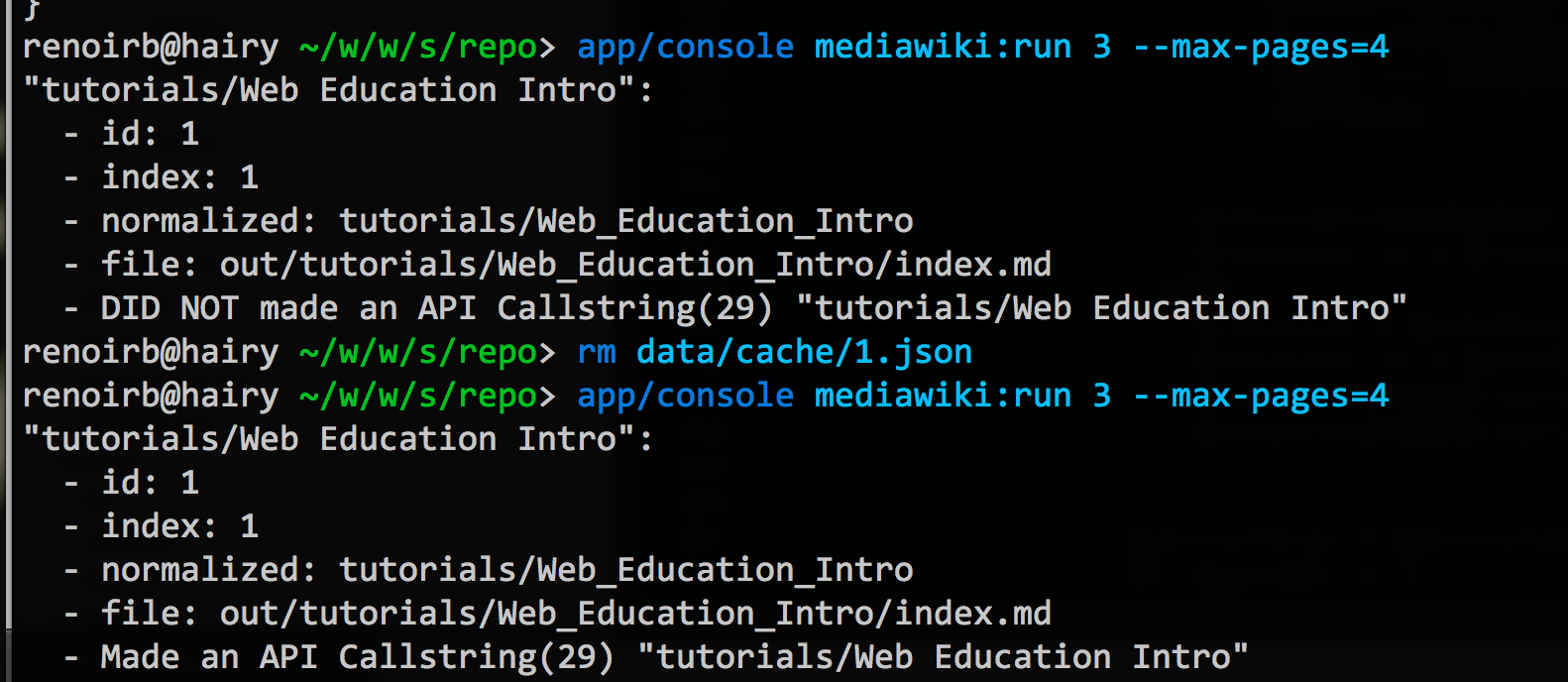

By design, webplatform/mediawiki-conversion allows re-running the 3rd step, so we could always get all edits until we locked the database (i.e. the cut-off) and keep the production site active until the cut-off date.
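As an illustration of that 3rd step, here is a hedged Python sketch of fetching rendered HTML and handing it to Pandoc. It is not the code from webplatform/mediawiki-conversion. The endpoint `https://wiki.example.org/w/api.php` is a placeholder, the function names are hypothetical, `formatversion=2` assumes a MediaWiki recent enough to support it, and the choice of Pandoc's `markdown` output format is an assumption.

```python
import json
import subprocess
from urllib.parse import urlencode


def parse_url(api_endpoint: str, title: str) -> str:
    """Build a MediaWiki action=parse request that returns the rendered page HTML."""
    query = urlencode({
        "action": "parse",
        "page": title,
        "prop": "text",
        "format": "json",
        "formatversion": "2",  # with version 2, parse.text is a plain string
    })
    return f"{api_endpoint}?{query}"


def extract_html(api_response: str) -> str:
    """Pull the rendered HTML (transclusions already expanded) out of the JSON body."""
    return json.loads(api_response)["parse"]["text"]


def html_to_markdown(html: str) -> str:
    """Convert HTML to Markdown. Requires the pandoc executable on PATH."""
    result = subprocess.run(
        ["pandoc", "--from", "html", "--to", "markdown"],
        input=html,
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout
```

Because the API returns HTML after templates have been expanded, the Markdown reflects what readers saw on the page rather than the raw wikitext stored in the edit history.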

Requirements

Along with what's described in the Migration steps, we also wanted to ensure we properly supported the following.

- Keep author attributions but have them anonymized

  We didn’t want contributors’ account email addresses to be published without their consent. So I set up a .mailmap so that contributors could open a PR to advertise themselves publicly on GitHub.

- Keep code examples in Markdown so we can colourize them

-

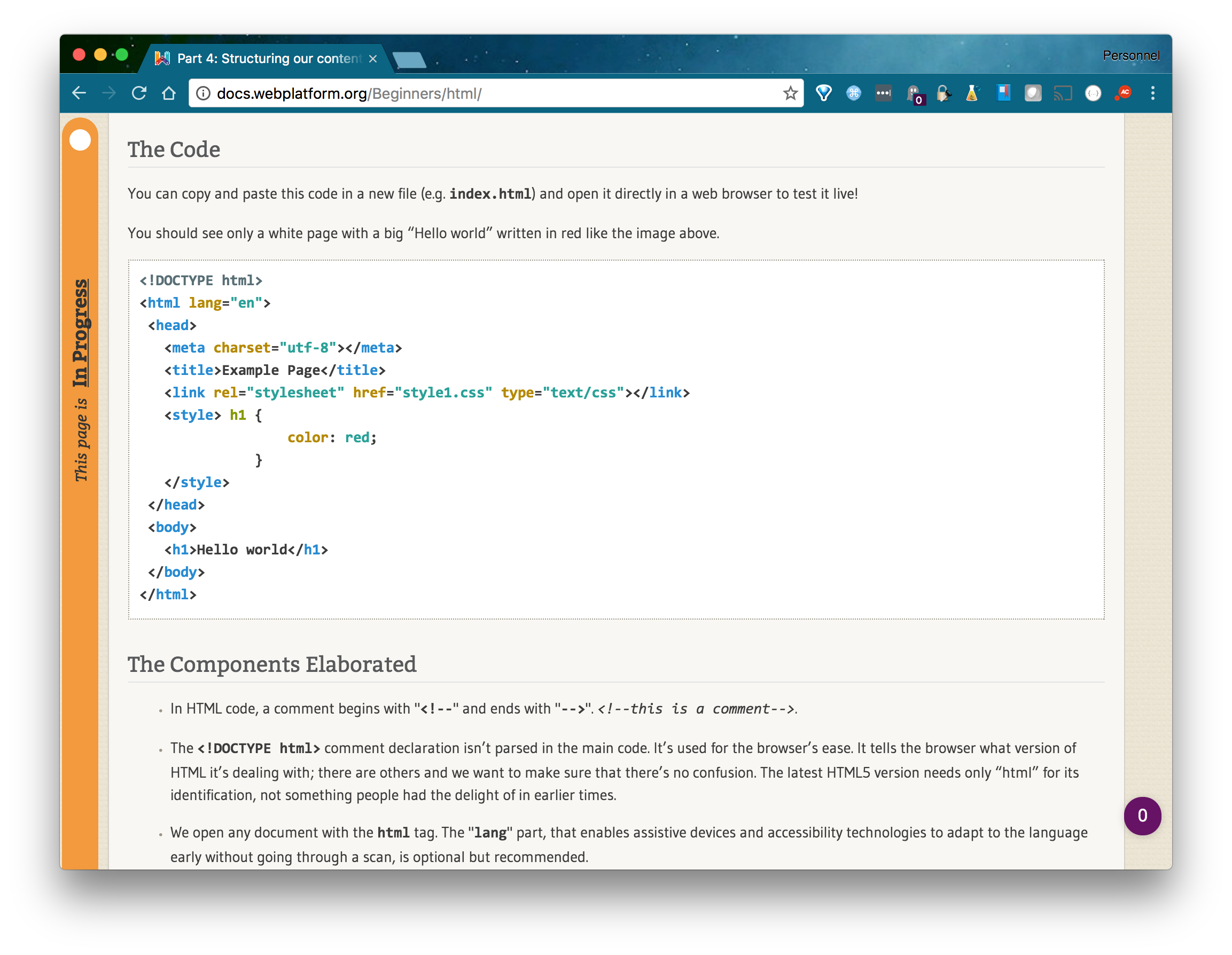

Golden standard pages should look the same

The "Golden Standard" pages were ones we were regularly referring to see how they would look and see if things are broken.

Also, since we were removing infrastructure, we should see features that were enabled at the time of Web.Archive.org snapshot, but would be omitted in the Static version (i.e.

webplatform.github.io)- Compatibility data was removed

- Contents on the right should be removed

- Overview table should look different

- On static version code blocks should be more colorized differently (it's using a different process)

Examples:

- docs.webplatform.org/wiki/css/properties/border-radius to webplatform.github.io/docs/css/properties/border-radius

- docs.webplatform.org/wiki/css/properties/display to webplatform.github.io/docs/css/properties/display

- docs.webplatform.org/wiki/javascript/functions to webplatform.github.io/docs/javascript/functions

- docs.webplatform.org/wiki/javascript/Array/filter to webplatform.github.io/docs/javascript/Array/filter

- docs.webplatform.org/wiki/javascript/Date to webplatform.github.io/docs/javascript/Date

- docs.webplatform.org/wiki/Beginners/html to webplatform.github.io/docs/Beginners/html

- docs.webplatform.org/wiki/tutorials/canvas_notearsgame to webplatform.github.io/docs/tutorials/canvas_notearsgame

- docs.webplatform.org/wiki/tutorials/svg_clipping_and_masking to webplatform.github.io/docs/tutorials/svg_clipping_and_masking

- Support MediaWiki special URL patterns

  MediaWiki is pretty relaxed about what it allows in its URLs.

  Since we’re migrating into a filesystem, we wanted to have only valid filesystem file names and decided to normalize all paths into ASCII characters.

  For example, the namespaces (e.g. /wiki/WPD:*) add a colon character, which would be URL-encoded into %3A.

  There were many other discrepancies, like:

  - Support MediaWiki special URL patterns: A page in another language should be migrated

  - Support MediaWiki special URL patterns: A page with () in its URL should be normalized

    - docs.webplatform.org/wiki/css/functions/translate() to webplatform.github.io/docs/css/functions/translate
    - docs.webplatform.org/wiki/svg/properties/animVal_(SVGAnimatedPreserveAspectRatio) to webplatform.github.io/docs/svg/properties/animVal_SVGAnimatedPreserveAspectRatio
    - docs.webplatform.org/wiki/svg/properties/cx_(SVGRadialGradientElement) to webplatform.github.io/docs/svg/properties/cx_SVGRadialGradientElement

  - Support MediaWiki special URL patterns: Pages within the WPD namespace should be migrated

    - docs.webplatform.org/wiki/WPD:Infrastructure/Monitoring/Monit to webplatform.github.io/docs/WPD/Infrastructure/Monitoring/Monit
    - docs.webplatform.org/wiki/WPD:Infrastructure/analysis/2013-Migrating_to_a_new_cloud_provider to webplatform.github.io/docs/WPD/Infrastructure/analysis/2013-Migrating_to_a_new_cloud_provider

    The image comes from /WPD/assets/, which means the image is hosted in webplatform/docs-wpd, in the assets/ folder.

  - Support MediaWiki special URL patterns: Pages in the Meta namespace should be migrated

    - docs.webplatform.org/wiki/Meta:HTML/Elements/style to webplatform.github.io/docs/Meta/HTML/Elements/style
    - docs.webplatform.org/wiki/Meta:web_platform_wednesday to webplatform.github.io/docs/Meta/web_platform_wednesday
    - docs.webplatform.org/wiki/Meta:Editors_Guide to webplatform.github.io/docs/Meta/Editors_Guide
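The URL examples above suggest a small set of rewriting rules. The following Python sketch reproduces them under stated assumptions: the actual rules in webplatform/mediawiki-conversion may differ, `normalize_path` is a hypothetical name, and dropping accents through Unicode decomposition is only one possible way to "normalize into ASCII" (characters without an ASCII base letter are simply removed).

```python
import unicodedata
from urllib.parse import unquote


def normalize_path(wiki_path: str) -> str:
    """Map a MediaWiki URL path to a filesystem-friendly /docs/ path."""
    path = unquote(wiki_path)  # %3A becomes ":" again
    if path.startswith("/wiki/"):
        path = path[len("/wiki/"):]
    # The namespace separator becomes a directory: WPD:Infrastructure -> WPD/Infrastructure
    path = path.replace(":", "/", 1)
    # Parentheses are dropped: translate() -> translate
    path = path.replace("(", "").replace(")", "")
    # Decompose accented letters, then keep only the ASCII part
    decomposed = unicodedata.normalize("NFKD", path)
    ascii_only = decomposed.encode("ascii", "ignore").decode("ascii")
    return "/docs/" + ascii_only.replace(" ", "_")
```

Applying the same function to every title in the dump also yields the old-path to new-path pairs needed for a redirect map.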

Requirements we couldn’t meet

- Ensure ALL asset uploads are displayed properly

  Before, we would use GlusterFS to store images for MediaWiki. Work was done to store them on static.webplatform.org, and all the pages use that link, but the SysTeam decided not to keep the redirects. Now that the site is archived, I’m unsure this can be fixed.

  If you want to see them, add this bookmarklet to your bookmarks bar: WebPlatform.github.io Show images

  The project http://webplatform.github.io/docs-assets/ was meant to be used as an origin for static.webplatform.org. Should we want to fix the issue, we could set up an HTTP redirect from static.webplatform.org to webplatform.github.io/docs-assets/.

  The following (should be) the same file;

- Make sure page links with different casing are redirected properly

  For example, the page for "Internet_and_Web" is sometimes linked with different casing, e.g. "Internet_and_Web/The_History_of_the_Web" or "internet_and_web/The_History_of_the_Web", but it is migrated only once: docs.webplatform.org/wiki/concepts/Internet_and_Web/The_History_of_the_Web to webplatform.github.io/docs/concepts/Internet_and_Web/The_History_of_the_Web

  Some pages link to it with the different casing "internet_and_web", as we can see from docs.webplatform.org/wiki/concepts to webplatform.github.io/docs/concepts

  MediaWiki would redirect, but on GitHub Pages, nothing has been put in place for those. If we wanted to support them, we could have used the same entries used to create the NGINX rewrite rules and added empty HTML pages, like GitHub Pages would. In the case of the WebPlatform project, it was decided to leave it as-is.

- Enforce all redirects from *.webplatform.org to properly redirect to webplatform.github.io.

  This migration process supported creating NGINX rewrite rules, but I’m unsure if they’ve been used.
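For the casing requirement, the "empty HTML pages" idea could look like the following Python sketch. Nothing like this was deployed for WebPlatform. `redirect_stub` and `casing_stubs` are hypothetical names, and only the all-lowercase variant of each path is covered, which is an arbitrary simplification.

```python
from html import escape
from pathlib import PurePosixPath


def redirect_stub(target: str) -> str:
    """Return a tiny HTML page that sends the browser to `target`."""
    safe = escape(target, quote=True)
    return (
        "<!DOCTYPE html>\n"
        '<meta charset="utf-8">\n'
        f'<link rel="canonical" href="{safe}">\n'
        f'<meta http-equiv="refresh" content="0; url={safe}">\n'
        f'<a href="{safe}">Moved</a>\n'
    )


def casing_stubs(canonical_paths):
    """Map each lowercased variant of a path to a stub pointing at the canonical page.

    Writing both variants to disk only works on a case-sensitive filesystem.
    """
    known = set(canonical_paths)
    stubs = {}
    for path in canonical_paths:
        variant = path.lower()
        if variant != path and variant not in known:
            stubs[str(PurePosixPath(variant) / "index.html")] = redirect_stub(path)
    return stubs
```

Each returned key is a file to write into the static site, so a link to internet_and_web/The_History_of_the_Web written in lowercase would land on a stub instead of a 404.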